QSAR of Small Molecule Drugs: The Use of Neural Networks

Quantitative Structure-Activity Relationship (QSAR) modeling, a cornerstone of modern drug discovery, has traditionally relied on linear models to predict a compound's activity from its molecular structure. These models have served the field well, but neural networks, particularly Graph Neural Networks (GNNs) such as Graph Convolutional Networks (GCNs) and Graph Attention Networks (GATs), are showing promise at capturing complex, non-linear relationships within molecular data. By learning directly from molecular graphs, these networks can reveal subtle patterns and interactions that simpler models might miss. In this article, we look at why GNNs are becoming a powerful tool for QSAR analysis.

QSAR in Drug Discovery: Modeling Molecular Complexity

The heart of QSAR lies in its predictive models, which correlate chemical descriptors (numerical encodings of molecular structure) with a compound's biological activity or another property of interest. These models act as a filter for virtual screening of enormous chemical libraries, narrowing the vast chemical space down to a manageable set of compounds that are likely to exhibit the desired activity. This substantially reduces the amount of costly experimental testing needed. The model ranks probable actives (potential therapeutic leads) above probable inactives, so that laboratory effort is spent confirming the most promising candidates.

At a more granular level, QSAR modeling seeks a quantitative relationship between compound structures and their measured activity. Molecular structures, represented either as 2D sketches or as more complex 3D conformations, are systematically analyzed to extract relevant descriptors such as physicochemical properties, topological indices, or electronic parameters. The activity data, typically expressed as numerical values (e.g., IC50, Ki, or other standardized measures), are then correlated with these descriptors to build predictive models.
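Potency values such as IC50 span several orders of magnitude, so they are commonly converted to a logarithmic scale, pIC50 = -log10(IC50 in mol/L), before being correlated with descriptors. The snippet below is a minimal sketch of that conversion. It assumes IC50 values are reported in nanomolar, and the compound names and values are hypothetical.

```python
import math


def ic50_to_pic50(ic50_nm: float) -> float:
    """Convert an IC50 in nanomolar to pIC50 = -log10(IC50 in molar)."""
    if ic50_nm <= 0:
        raise ValueError("IC50 must be positive")
    ic50_molar = ic50_nm * 1e-9  # nM -> M
    return -math.log10(ic50_molar)


# Hypothetical assay results (IC50 in nM) for three made-up compounds
measurements = {"cmpd_A": 10.0, "cmpd_B": 100.0, "cmpd_C": 2500.0}

# Higher pIC50 means a more potent compound
pic50 = {name: round(ic50_to_pic50(value), 2) for name, value in measurements.items()}
print(pic50)
```

On the log scale, a tenfold change in IC50 becomes a difference of one unit. That spacing is usually better suited to regression than raw concentrations.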

The representation of chemical structures in computational systems is crucial for effective QSAR modeling. Molecules are commonly depicted as graphs, where atoms serve as vertices and chemical bonds as edges. This graph-based representation allows the calculation of numerous molecular indices and descriptors, which form the basis for quantitative comparisons between different compounds. The molecular information is often stored in specialized file formats, such as MOL files, which contain detailed information about atomic coordinates, bond types, and connectivity tables.

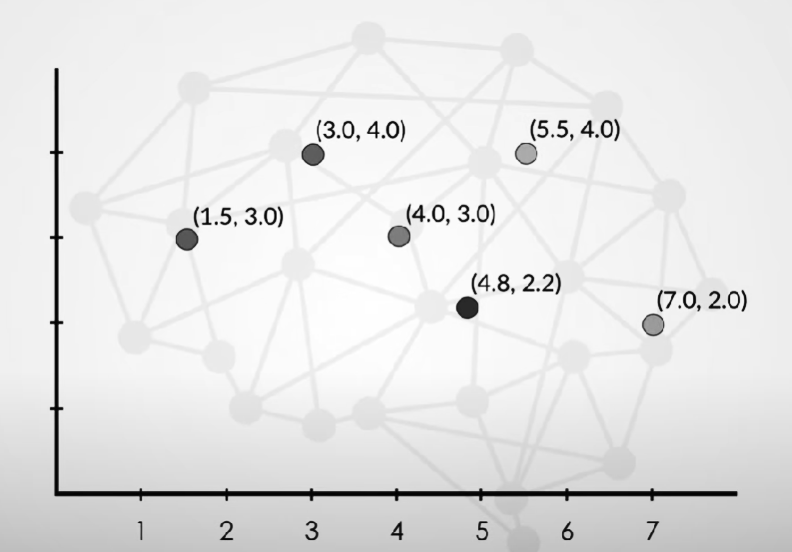
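The graph view of a molecule can be sketched in a few lines. The example below is an illustrative sketch, not a MOL-file parser. It stores heavy atoms as vertices and a bond list as edges, a simplified stand-in for a MOL file's connectivity table (real MOL files use 1-based atom numbers and also store bond orders and coordinates, which are ignored here). From this graph it computes one classic topological index, the Wiener index: the sum of shortest-path (bond-count) distances over all pairs of atoms. The helper names are hypothetical.

```python
from collections import deque


def build_graph(atoms, bonds):
    """Adjacency list: vertices are atom indices (0-based), edges are bonds."""
    adj = {i: [] for i in range(len(atoms))}
    for a, b in bonds:
        adj[a].append(b)
        adj[b].append(a)
    return adj


def shortest_path_lengths(adj, source):
    """Breadth-first search: number of bonds from `source` to every reachable atom."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nbr in adj[node]:
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                queue.append(nbr)
    return dist


def wiener_index(adj):
    """Sum of topological distances over all unordered atom pairs (assumes a connected graph)."""
    total = 0
    for i in adj:
        dist = shortest_path_lengths(adj, i)
        total += sum(d for j, d in dist.items() if j > i)  # count each pair once
    return total


# Hydrogen-suppressed graphs of two C4H10 isomers
butane = build_graph(["C", "C", "C", "C"], [(0, 1), (1, 2), (2, 3)])
isobutane = build_graph(["C", "C", "C", "C"], [(0, 1), (0, 2), (0, 3)])
print(wiener_index(butane), wiener_index(isobutane))
```

The two isomers share the same formula but get different Wiener indices (10 for butane and 9 for isobutane). Graph-derived descriptors can therefore distinguish molecules that simple composition counts cannot.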

In more advanced QSAR techniques, each compound is treated as a vector in a multidimensional descriptor space, where each dimension corresponds to a specific molecular descriptor or property. For instance, a compound $M$ might be represented by the vector $\mathbf{R}_M = (x_{M1}, x_{M2}, \ldots, x_{MK})$, where each $x_{Mi}$ is the value of the $i$-th of $K$ molecular descriptors. This vectorial representation lets researchers quantify similarities between compounds, identify structural patterns correlated with activity, and predict the properties of novel, untested compounds. It underpins many modern QSAR methodologies.

Descriptor matrix: rows are samples (compounds), columns are variables (descriptors).

| Compound | X₁ | X₂ | … | Xₘ |
|---|---|---|---|---|
| 1 | X₁₁ | X₁₂ | … | X₁ₘ |
| 2 | X₂₁ | X₂₂ | … | X₂ₘ |
| … | … | … | … | … |
| n | Xₙ₁ | Xₙ₂ | … | Xₙₘ |
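Once every compound is a row vector of descriptors, similarity becomes a distance calculation. The sketch below uses Euclidean distance, one common choice among several, to find the library compound closest to a query. It assumes three descriptors per compound, and the values are made up purely for illustration.

```python
import math

# Hypothetical descriptor vectors R_M = (x_M1, ..., x_MK) with K = 3; values are illustrative only
library = {
    "M1": [1.2, 0.5, 3.0],
    "M2": [1.1, 0.6, 2.9],
    "M3": [4.0, 2.5, 0.1],
}


def euclidean(u, v):
    """Straight-line distance between two descriptor vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def nearest_neighbor(query, library):
    """Name of the library compound closest to `query` in descriptor space."""
    return min(library, key=lambda name: euclidean(query, library[name]))


query = [1.2, 0.5, 2.95]  # a hypothetical untested compound
print(nearest_neighbor(query, library))
```

In practice, descriptors live on very different scales (molecular weight versus a charge, for example), so columns are usually standardized before distances are computed. Otherwise the largest-valued descriptor dominates the result.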

QSAR modeling remains incredibly challenging due to the vast diversity of molecular structures and their corresponding biological activities. Moreover, the risk of overfitting, where a model becomes too tailored to the training data and performs poorly on new data, poses a significant obstacle. This is especially problematic in drug discovery, where models must generalize well to predict the efficacy and safety of novel compounds. Addressing these challenges requires careful model selection, robust validation techniques, and a deep understanding of both chemistry and biology.
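One widely used guard against overfitting is cross-validation. Each compound is predicted by a model that never saw it, and the resulting cross-validated q² is compared with the ordinary training R². The sketch below implements leave-one-out (LOO) cross-validation, using q² = 1 - PRESS / SS_tot, one common definition. A single-descriptor linear model serves only as a simple stand-in for whatever QSAR model is being validated, and the data are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = a0 + a1 * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a1 = sxy / sxx
    return my - a1 * mx, a1


def r_squared(xs, ys):
    """Goodness of fit on the training data itself."""
    a0, a1 = fit_line(xs, ys)
    my = sum(ys) / len(ys)
    ss_res = sum((y - (a0 + a1 * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


def q_squared_loo(xs, ys):
    """Leave-one-out q²: refit without compound i, predict it, accumulate PRESS."""
    my = sum(ys) / len(ys)
    press = 0.0
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        a0, a1 = fit_line(train_x, train_y)
        press += (ys[i] - (a0 + a1 * xs[i])) ** 2
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1 - press / ss_tot


# Hypothetical descriptor values and activities
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2]
print(round(r_squared(xs, ys), 3), round(q_squared_loo(xs, ys), 3))
```

For least-squares models, q² can never exceed the training R². A large gap between the two is a warning sign that the model is fitting noise rather than structure.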

Understanding Graph-Based Neural Networks: Advancing Beyond Linear QSAR Models

So far we have seen that modern QSAR modeling relies on capturing the relationship between chemical structure and activity. The simplest way to model this is with multivariate linear regression, which gives so-called linear QSAR models. These models assume a straightforward linear correlation between molecular descriptors (such as physicochemical properties, topological indices, and electronic parameters) and biological activity, which makes them simple, interpretable, and computationally efficient. Their basic form is $Y = a_0 + a_1X_1 + a_2X_2 + \dots + a_mX_m$, where $Y$ is the biological activity, $X_1, \dots, X_m$ are molecular descriptors, and $a_0, \dots, a_m$ are coefficients fitted to the training data. Thanks to these strengths, linear models remain widely used in early-stage drug discovery and toxicity prediction. However, they struggle to capture the full complexity of structure-activity relationships, which tend to be non-linear.
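Fitting the linear model means finding the coefficients a₀…aₘ that minimize the squared prediction error. The sketch below solves the least-squares normal equations with plain Gaussian elimination so that every step is visible. The function names are hypothetical, and the toy data are generated from known coefficients so that the fit can be checked.

```python
def fit_linear_qsar(X, y):
    """Least-squares coefficients [a0, a1, ..., am] for Y = a0 + a1*X1 + ... + am*Xm.

    Solves the normal equations (A^T A) a = A^T y, where A is X with a leading column of ones.
    """
    A = [[1.0] + list(row) for row in X]
    p = len(A[0])
    ata = [[sum(r[i] * r[j] for r in A) for j in range(p)] for i in range(p)]
    aty = [sum(r[i] * yi for r, yi in zip(A, y)) for i in range(p)]

    # Gaussian elimination with partial pivoting on the augmented matrix [A^T A | A^T y]
    M = [ata[i] + [aty[i]] for i in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, p):
            factor = M[r][col] / M[col][col]
            for c in range(col, p + 1):
                M[r][c] -= factor * M[col][c]

    # Back substitution
    a = [0.0] * p
    for i in reversed(range(p)):
        a[i] = (M[i][p] - sum(M[i][j] * a[j] for j in range(i + 1, p))) / M[i][i]
    return a


def predict(a, x):
    """Evaluate Y = a0 + sum(ai * xi) for one compound's descriptor vector."""
    return a[0] + sum(ai * xi for ai, xi in zip(a[1:], x))


# Toy descriptor matrix (rows = compounds) with activities built from Y = 1 + 2*X1 + 3*X2
X = [[0, 1], [1, 0], [1, 1], [2, 1], [3, 2]]
y = [4, 3, 6, 8, 13]
coefficients = fit_linear_qsar(X, y)
print([round(c, 6) for c in coefficients])
```

The normal equations are easy to read but can be numerically fragile when descriptors are highly correlated. Production code typically calls a QR- or SVD-based solver instead, such as `numpy.linalg.lstsq`.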

However, if you want to go deeper, your model needs to account for more of this complexity. Enter GNNs. GNNs represent a flagship advancement in QSAR modeling because, unlike traditional neural networks that operate on fixed-size inputs, they are designed to process and learn from graph-structured data directly. To see how, let's take a look at the typical architecture of a GNN:

- Node Embedding: Initial features are assigned to each atom (node) in the molecular graph. These could include atomic properties like element type, charge, or hybridization state.

- Message Passing: In each layer of the GNN, information is passed between neighboring nodes (atoms). This allows the network to update each atom’s representation based on its local chemical environment.

- Update Functions: After receiving messages from neighbors, each node’s representation is updated. This process can be thought of as refining the atom’s features based on its chemical context within the molecule.

- Readout Function: After several rounds of message passing, a readout function aggregates information from all nodes to create a single vector representation of the entire molecule. This global representation can then be used for tasks like property prediction or classification.
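The four steps above can be traced in a tiny forward pass. The sketch below has several simplifications, all of them assumptions:

- Atom features are one-hot element types.
- Messages are summed neighbour vectors.
- The update is a ReLU of two small weight matrices applied to the atom's own vector and to the message.
- The readout is a sum over atoms.

The weight values are hypothetical placeholders. A real GNN learns them by training, usually uses separate weights per layer, and is built with a framework rather than plain lists.

```python
# Hypothetical element vocabulary for the one-hot node features
ELEMENTS = ["C", "N", "O"]


def one_hot(symbol):
    """Node embedding: one-hot element type (real models add charge, hybridization, ...)."""
    return [1.0 if symbol == e else 0.0 for e in ELEMENTS]


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def relu(v):
    return [max(0.0, x) for x in v]


def message_passing_layer(h, adj, W_self, W_nbr):
    """One round of message passing followed by the update function."""
    new_h = []
    for v in range(len(h)):
        # Message: element-wise sum of the neighbouring atoms' current vectors
        message = [0.0] * len(h[v])
        for u in adj[v]:
            message = [m + x for m, x in zip(message, h[u])]
        # Update: combine the atom's own vector with the aggregated message
        combined = [a + b for a, b in zip(matvec(W_self, h[v]), matvec(W_nbr, message))]
        new_h.append(relu(combined))
    return new_h


def readout(h):
    """Sum pooling over atoms: independent of atom order and of molecule size."""
    return [sum(col) for col in zip(*h)]


def molecule_embedding(atoms, bonds, layers):
    adj = {i: [] for i in range(len(atoms))}
    for a, b in bonds:
        adj[a].append(b)
        adj[b].append(a)
    h = [one_hot(s) for s in atoms]  # node embedding
    for W_self, W_nbr in layers:  # repeated message passing + update
        h = message_passing_layer(h, adj, W_self, W_nbr)
    return readout(h)  # one vector for the whole molecule


# Hypothetical (untrained) weights, reused for two rounds of message passing
W_self = [[0.5, 0.1, 0.0], [0.0, 0.4, 0.2], [0.3, 0.0, 0.6]]
W_nbr = [[0.2, 0.0, 0.1], [0.1, 0.3, 0.0], [0.0, 0.2, 0.2]]
layers = [(W_self, W_nbr), (W_self, W_nbr)]

# Heavy-atom graphs: ethanol written in two atom orders, and acetic acid
ethanol = molecule_embedding(["C", "C", "O"], [(0, 1), (1, 2)], layers)
ethanol_reordered = molecule_embedding(["O", "C", "C"], [(0, 1), (1, 2)], layers)
acetic_acid = molecule_embedding(["C", "C", "O", "O"], [(0, 1), (1, 2), (1, 3)], layers)
print(ethanol, ethanol_reordered, acetic_acid)
```

Two properties fall out of this design. Renumbering the atoms of ethanol yields the same molecule vector, and a four-atom molecule produces a vector of the same length as a three-atom one. That fixed-length output is what lets a downstream property predictor handle molecules of any size.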

Interesting fact: GNNs are increasingly used wherever linkages and relationships need to be explicitly mapped. Social networks are one well-known application. In social networks, nodes can represent individuals, and edges can represent relationships or interactions. GNNs can model the complex dependencies and patterns within these networks, for example predicting friendships, detecting communities, and even identifying influential users!

Let’s look at how GNNs are used in drug discovery:

- Direct Structural Representation: GNNs take molecular graphs as input, with atoms as nodes and chemical bonds as edges. This allows the model to work directly with the molecular structure, preserving topological information (and spatial information, when 3D coordinates are included as features) that can be lost when a molecule is reduced to a fixed set of precomputed descriptors.

- Hierarchical Feature Learning: GNNs learn representations of molecules in a hierarchical manner. They start by learning atom-level features, then progressively aggregate information to capture substructure and ultimately whole-molecule characteristics. This mimics the hierarchical nature of chemical structures and their properties.

- Flexibility in Molecule Size: Unlike traditional neural networks that require fixed-size inputs, GNNs can handle molecules of varying sizes and complexities within the same model, because the same message-passing weights are applied at every atom and the readout aggregates over however many atoms are present. This is particularly useful in drug discovery, where compounds can vary greatly in size and composition.

- Capturing Non-Linear Relationships: GNNs excel at capturing complex, non-linear relationships between molecular structure and activity. This is crucial in QSAR, where the relationship between structure and function is often highly complex and not easily described by simple linear models.

- End-to-End Learning: GNNs can learn relevant molecular features directly from raw molecular graphs, potentially eliminating the need for handcrafted molecular descriptors. This end-to-end learning approach can uncover subtle structural patterns that might be missed by predefined descriptors.

These attributes are making GNNs an increasingly popular choice in modern drug discovery.

Persistent Challenges in QSAR Modeling and Drug Discovery

Despite advances in computational techniques, QSAR modeling and drug discovery continue to face significant challenges. Apart from the obvious computational cost of running large-scale QSAR models, many of these challenges cannot be solved directly with AI or machine learning, because they revolve primarily around data quality and reproducibility.

The unreliability of published data on potential drug targets has become a critical problem in QSAR modeling and drug discovery. This issue is part of a broader trend of increasing retractions and misconduct in biomedical research. A comprehensive study analyzing 2,069 biomedical papers retracted between 2000 and 2021 from European institutions reveals alarming statistics. The overall retraction rate rose significantly from 10.7 per 100,000 publications in 2000 to 44.8 per 100,000 in 2020. Research misconduct accounted for a staggering 67% of these retractions, with the most common reasons being duplication, followed by unspecified research misconduct. Unreliable data of this kind undermines reproducibility. This not only wastes resources but also misdirects future research efforts, potentially derailing drug discovery projects and delaying the development of new therapeutic agents. These problems are further exacerbated by non-curated datasets, which introduce multiple errors into QSAR modeling. These errors include wrong structures, misprints in compound names, incorrectly calculated descriptors, and the inclusion of duplicates, mixtures, and salts.
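Two of these error types, salts or mixtures and duplicate entries, can be flagged automatically once structures are stored as SMILES strings. The sketch below relies on the fact that in SMILES a "." separates disconnected components. It also groups records that share an identical structure string. The record IDs, SMILES, and activity values are hypothetical. Exact string matching only catches duplicates written identically, so a real curation pipeline would first standardize and canonicalize structures with a cheminformatics toolkit such as RDKit.

```python
from collections import defaultdict

# Hypothetical raw records: (record_id, SMILES, pIC50)
records = [
    ("r1", "CCO", 5.1),
    ("r2", "CC(=O)[O-].[Na+]", 4.2),  # sodium acetate: two disconnected components
    ("r3", "CCO", 5.3),  # same structure string as r1
    ("r4", "Oc1ccccc1", 6.0),
]


def is_multicomponent(smiles):
    """In SMILES, '.' separates disconnected components (salts, mixtures, solvates)."""
    return "." in smiles


def flag_issues(records):
    """Return record IDs of multi-component entries and groups of repeated structures."""
    by_structure = defaultdict(list)
    for rec_id, smiles, _activity in records:
        by_structure[smiles].append(rec_id)
    multicomponent = [rec_id for rec_id, smiles, _activity in records if is_multicomponent(smiles)]
    duplicates = {smiles: ids for smiles, ids in by_structure.items() if len(ids) > 1}
    return multicomponent, duplicates


salts_or_mixtures, duplicate_groups = flag_issues(records)
print(salts_or_mixtures)
print(duplicate_groups)
```

Flagged records are candidates for review rather than automatic deletion. A salt may need its counter-ion stripped, and duplicates with consistent activities may be averaged rather than dropped.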

Alleviating these problems will take more than better algorithms; it requires a comprehensive and systematic approach to data curation and validation. This approach should follow a multi-step workflow that progressively reduces the error rate while keeping the dataset reasonably large. Key steps include rigorous chemical curation, duplicate analysis, assessment of intra- and inter-laboratory experimental variability, exclusion of unreliable data sources, and detection of activity cliffs (pairs of structurally similar compounds with very different activities). Furthermore, QSAR models should be developed and validated according to established guidelines such as the OECD Principles. These call for a defined endpoint, an unambiguous algorithm, a defined domain of applicability, and appropriate measures of goodness-of-fit, robustness, and predictivity. Where possible, they also call for a mechanistic interpretation of the model. Combined with carefully curated training data, these stringent curation protocols and modeling principles can significantly improve the reliability of QSAR models.
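Activity-cliff detection pairs a structural similarity measure with an activity difference. The sketch below uses Tanimoto similarity on fingerprints stored as sets of "on" bits. It flags pairs that are at least 0.7 similar yet differ by at least 2 pIC50 units. Both thresholds, the fingerprint bits, and the activities are hypothetical. In practice the fingerprints would come from a cheminformatics toolkit, and thresholds are chosen per dataset.

```python
from itertools import combinations


def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity of two fingerprints given as sets of on-bit indices."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def find_activity_cliffs(data, sim_threshold=0.7, delta_threshold=2.0):
    """Pairs that are structurally similar but differ strongly in activity (pIC50 units)."""
    cliffs = []
    for (id_a, fp_a, act_a), (id_b, fp_b, act_b) in combinations(data, 2):
        sim = tanimoto(fp_a, fp_b)
        delta = abs(act_a - act_b)
        if sim >= sim_threshold and delta >= delta_threshold:
            cliffs.append((id_a, id_b, round(sim, 2), round(delta, 2)))
    return cliffs


# Hypothetical compounds: (id, fingerprint bits, pIC50)
data = [
    ("A", {1, 4, 7, 9, 12, 15}, 8.5),
    ("B", {1, 4, 7, 9, 12, 16}, 5.9),
    ("C", {2, 3, 5, 8}, 6.0),
]
print(find_activity_cliffs(data))
```

Flagged pairs are worth a second look before modeling. A genuine cliff is real chemistry that a smooth model will struggle with, while a spurious one often points to a data error in one of the two entries.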

In conclusion, dramatic and exciting changes are taking place in drug discovery, but new challenges of increasing complexity will continue to emerge. We will keep exploring new methods and algorithms as they appear. Stay tuned!

We help Biotech Startups with their AI

Neuralgap helps Biotech Startups bring experimental AI into reality. If you have an idea but need a team to iterate rapidly or build out the algorithm, we are here.