Enhancing ECG Interpretation Through Multi-Modal Integration

A Next-Generation Approach to Cardiac Diagnostics

Multimodal Transformers in Diagnostic Triage

Current ECG interpretation faces several well-documented challenges, as highlighted in the “Development of Interpretable Machine Learning Models” study. The study's analysis revealed that even experienced interpreters can struggle with overlapping wave patterns, with distinguishing normal beats from atrial premature beats, and with identifying fusion beats. Using visual attention tracking, the study showed that readers tend to focus excessively on QRS complexes while potentially missing critical changes in ST segments and PR intervals. These challenges are compounded by technical limitations, including baseline wander caused by patient movement and electrode contact issues, which can mask subtle but important abnormalities.

AI-assisted interpretation could help address these limitations by giving consistent attention to all ECG segments simultaneously. The study demonstrated that well-trained neural networks can analyze every ECG component consistently, from PR intervals through ST segments, without the attentional biases observed in human readers. Advanced AI models have also shown the ability to compensate for technical variations and equipment limitations, learning to recognize patterns even in the presence of noise and baseline variations. This suggests AI could serve as a valuable second set of eyes, catching subtle abnormalities that might be missed during routine human interpretation, without replacing the critical role of clinical expertise.
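One common way to encourage this kind of robustness (our assumption for illustration, not a description of the cited study's method) is to augment training signals with synthetic artefacts. The sketch below adds slow baseline wander and 50 Hz powerline interference to a clean trace. The helper name `augment_ecg`, the 360 Hz sampling rate (the rate used in the MIT-BIH recordings), and the amplitude ranges are all hypothetical choices.

```python
import numpy as np


def augment_ecg(signal, fs=360.0, rng=None,
                wander_amp=0.3, wander_freq_range=(0.05, 0.5),
                powerline_amp=0.1, powerline_freq=50.0):
    """Return a copy of `signal` with synthetic baseline wander and
    powerline interference added (hypothetical augmentation helper)."""
    rng = np.random.default_rng() if rng is None else rng
    t = np.arange(signal.shape[-1]) / fs

    # Baseline wander: a slow sinusoid (well below 1 Hz) with random
    # frequency and phase, mimicking breathing or patient movement.
    f_wander = rng.uniform(*wander_freq_range)
    phase_w = rng.uniform(0.0, 2.0 * np.pi)
    wander = wander_amp * np.sin(2.0 * np.pi * f_wander * t + phase_w)

    # Powerline interference: a mains-frequency sinusoid (50 or 60 Hz).
    phase_p = rng.uniform(0.0, 2.0 * np.pi)
    powerline = powerline_amp * np.sin(2.0 * np.pi * powerline_freq * t + phase_p)

    return signal + wander + powerline


# Usage: a toy 10-second trace (a plain sinusoid standing in for a real ECG).
fs = 360.0
t = np.arange(int(10 * fs)) / fs
clean = np.sin(2.0 * np.pi * 1.2 * t)  # roughly 72 bpm stand-in rhythm
noisy = augment_ecg(clean, fs=fs, rng=np.random.default_rng(0))
print(noisy.shape, float(np.max(np.abs(noisy - clean))) <= 0.4 + 1e-9)
```

Randomizing frequency and phase on every call means the model never sees the same artefact twice, which pushes it to rely on waveform morphology rather than on a fixed noise pattern.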

Single-Modality AI Models

Single-modality AI models that focus solely on ECG signals have demonstrated remarkable pattern-recognition capabilities over the past decade. Most deep learning approaches have achieved binary classification accuracies of 94-99% for various cardiac conditions, with some models performing at or above cardiologist level for specific arrhythmias. These models work by breaking ECG signals down into key morphological components, analyzing PR intervals, QRS complexes, and ST segments simultaneously through complex neural network architectures, as demonstrated in multiple studies using the MIT-BIH database.

However, single-modality approaches face significant limitations. In one study, for example, such models were fooled by subtle signal perturbations (assigning 100% confidence to wrong classifications) and struggled with technical variations in ECG recordings (showing a 50-72% degradation in accuracy under powerline interference). Most critically, they lack the broader clinical context that human interpreters naturally integrate. Research has also shown that these models tend to focus excessively on QRS complexes while potentially missing critical changes in other segments, highlighting the inherent risks of relying solely on ECG signal patterns for diagnosis.
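To make the perturbation failure concrete, the sketch below applies the fast gradient sign method (FGSM), a standard adversarial-attack technique, to a toy linear classifier on a synthetic 1,000-sample signal. It is not the setup of the study mentioned above; the weights, the signal, and the step size `eps = 0.01` are arbitrary assumptions. A perturbation of just 1% of the signal amplitude flips a confident prediction, because each of the 1,000 tiny changes pushes the logit in the same direction.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


rng = np.random.default_rng(0)
n = 1000                                       # samples in the toy signal window
w = rng.normal(size=n)                         # weights of a toy linear classifier
x = np.sin(np.linspace(0.0, 8.0 * np.pi, n))   # clean input, amplitude 1
b = 2.0 - w @ x                                # bias chosen so the clean logit is +2

p_clean = sigmoid(w @ x + b)                   # P(class 1) on the clean input

# FGSM: step along the sign of the loss gradient w.r.t. the input.
# For logistic loss with true label y = 1, dL/dx = (p - y) * w, whose
# sign is -sign(w) because p < 1.
y = 1.0
grad_x = (p_clean - y) * w
eps = 0.01                                     # 1% of the signal amplitude
x_adv = x + eps * np.sign(grad_x)

p_adv = sigmoid(w @ x_adv + b)
print(f"clean P(y=1) = {p_clean:.3f}, adversarial P(y=1) = {p_adv:.4f}")
print(f"max |perturbation| = {np.max(np.abs(x_adv - x)):.3f}")
```

The logit shifts by `eps` times the L1 norm of the weights, so the attack grows stronger as the input gets longer, which is one reason long, high-resolution signals are vulnerable.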

Additionally, most of these models target one specific cardiac abnormality rather than detecting multiple abnormalities within a single model. The approaches that do build multiclass classifiers to detect several abnormalities have plateaued in the 72-78% accuracy range. The primary challenge is class imbalance in most datasets, which biases the feature extractor towards the majority classes. Training on multiple datasets with multiple downstream tasks jointly can produce a more robust feature extractor.
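A small worked example shows why imbalance is so damaging. With hypothetical label counts (900 normal, 70 ventricular, and 30 fusion beats, chosen only for illustration), a degenerate classifier that ignores the minority classes entirely still reports 90% accuracy, while balanced accuracy (the mean of per-class recall) drops to one third.

```python
from collections import Counter

# Hypothetical beat labels with heavy class imbalance
# (N = normal, V = ventricular, F = fusion); counts are illustrative only.
labels = ["N"] * 900 + ["V"] * 70 + ["F"] * 30

# A degenerate "model" that always predicts the majority class.
majority_class, _ = Counter(labels).most_common(1)[0]
predictions = [majority_class] * len(labels)

accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)

# Balanced accuracy = mean of per-class recall, which exposes the bias.
recalls = []
for cls in sorted(set(labels)):
    idx = [i for i, y in enumerate(labels) if y == cls]
    recalls.append(sum(predictions[i] == cls for i in idx) / len(idx))
balanced_accuracy = sum(recalls) / len(recalls)

print(f"accuracy = {accuracy:.2f}, balanced accuracy = {balanced_accuracy:.2f}")
```

A feature extractor trained on such data receives far more gradient signal from normal beats, so it has little incentive to learn features that separate the rare classes.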

Complementing with Additional Modes

While single modality ECG models have shown impressive capabilities, the inherent limitations of relying solely on ECG signals highlight the need for more comprehensive approaches. Multimodal AI architectures have emerged as a promising solution, integrating diverse data streams to mirror the multifaceted approach used in clinical diagnosis.

Essentially, what we would like to do is replicate (to a certain extent) the clinical diagnostic workflow by combining multiple concurrent data streams, from real-time physiological signals to longitudinal patient records. In AI, multimodal learning (MML) integrates diverse data types, such as imaging, signals, genomic profiles, and electronic health records, to enhance diagnostic precision, particularly for cardiovascular abnormalities. Combining MML with multitask learning (MTL) helps address the challenges posed by rare conditions and improves model performance even with limited data. However, current MML approaches face challenges of their own, including reliance on paired datasets and the complexity of integrating multiple modalities, which can hinder explainability.

To address these challenges, Neuralgap has pioneered a novel architecture called Multi-Uni-Model (MuM) for multimodal, multitask learning. This approach demonstrates significant performance improvements in cardiovascular disease prediction while using a smaller model (i.e. fewer learnable parameters).

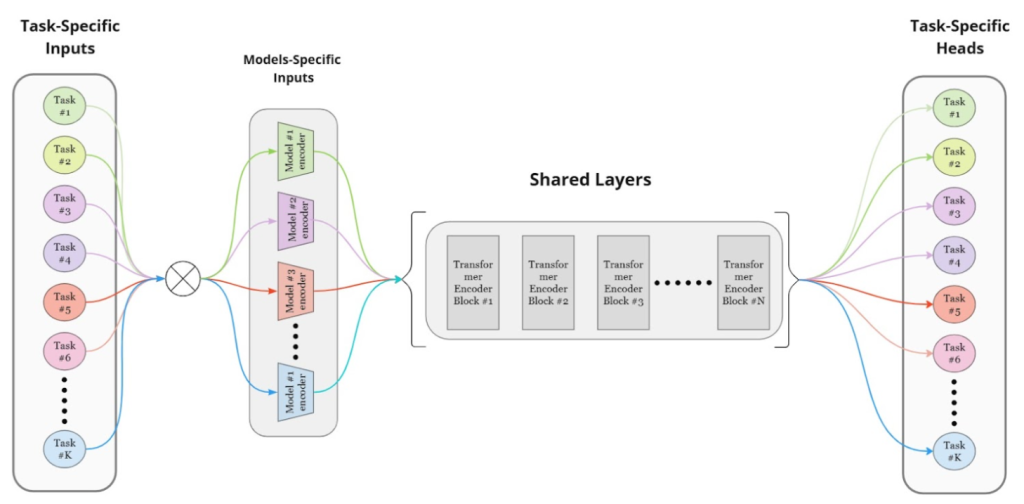

Our model – currently called Neo – is designed to process inputs from multiple modalities simultaneously through a by using i) modality-specific encoders for each type of data (imagine ECG and Echocardiogram), ii) shared transformer layers that process and fuse these embeddings (i.e. its searches for relationships across both these modalities) and iii) task-specific heads that generate final predictions (i.e. outputs). A high level model architecture diagram is shown in the figure below.

High-level architecture of Neuralgap’s Multi-Unimodal Model
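The three components can be sketched in a few lines of NumPy. This is a hypothetical, heavily simplified illustration and not Neo's actual implementation. It uses a single attention head with random, untrained weights and omits layer normalization, positional and modality embeddings, and feed-forward blocks. All names (`encoders`, `shared_layer`, `heads`, `forward`) and sizes are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
D_MODEL = 32  # shared embedding width (hypothetical)


def linear(d_in, d_out):
    """Randomly initialised weight matrix for a linear layer."""
    return rng.normal(scale=d_in ** -0.5, size=(d_in, d_out))


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


# (i) Modality-specific encoders: project each modality's per-token
# features into the shared D_MODEL space. Feature sizes are hypothetical.
encoders = {
    "ecg": linear(12, D_MODEL),    # e.g. 12 leads per time step
    "echo": linear(64, D_MODEL),   # e.g. a 64-dim feature per echo frame
}

# (ii) One shared single-head self-attention layer, standing in for a stack
# of shared transformer layers; its weights are shared by all modalities/tasks.
Wq, Wk, Wv = (linear(D_MODEL, D_MODEL) for _ in range(3))


def shared_layer(tokens):
    q, k, v = tokens @ Wq, tokens @ Wk, tokens @ Wv
    attn = softmax(q @ k.T / np.sqrt(D_MODEL))  # every token attends to all tokens
    return tokens + attn @ v                    # residual connection


# (iii) Task-specific heads applied to a mean-pooled representation.
heads = {
    "rhythm_class": linear(D_MODEL, 5),  # hypothetical 5 rhythm classes
    "lvef": linear(D_MODEL, 1),          # ejection-fraction regression
}


def forward(inputs, task):
    """inputs: dict of modality -> array of shape (n_tokens, n_features)."""
    embedded = [x @ encoders[m] for m, x in inputs.items()]
    tokens = np.concatenate(embedded, axis=0)  # fuse modalities into one sequence
    fused = shared_layer(tokens)
    pooled = fused.mean(axis=0)
    return pooled @ heads[task]


ecg = rng.normal(size=(100, 12))   # 100 time steps, 12 leads
echo = rng.normal(size=(16, 64))   # 16 frame embeddings
print(forward({"ecg": ecg}, "rhythm_class").shape)          # (5,)
print(forward({"ecg": ecg, "echo": echo}, "lvef").shape)    # (1,)
```

Because every encoder maps into the same embedding width, a task can draw on any subset of the available modalities, and the shared attention layer is where cross-modal relationships can be learned.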

Our model addresses issues such as negative transfer and optimization difficulties by treating each task as an independent model, with separate optimizers for the task-specific and shared layers so that each can be updated independently. To prevent catastrophic forgetting, our training strategy balances task updates: each mini-batch is drawn from a single task, and every task receives an equal number of updates. This flexible and scalable approach makes it possible to add new tasks or modalities without major architectural changes, enhancing diagnostic accuracy in biomedical applications.
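The training schedule can be illustrated with a toy problem: two regression tasks that share a linear "encoder" `W` and each have their own head. This is an illustrative assumption, not Neo's training code. The plain-SGD optimizer class, learning rates, batch size, round-robin order, and synthetic data are all hypothetical. The sketch keeps the three properties described above: each mini-batch comes from one task, shared and task-specific parameters are stepped by separate optimizer objects, and every task receives the same number of updates.

```python
import numpy as np

rng = np.random.default_rng(0)
D_IN, D_HID, N, BATCH = 4, 3, 256, 32
TASKS = ["task_a", "task_b"]

# Synthetic data: both tasks share an underlying feature map W_true but
# have different output weights, so a shared layer can serve both.
W_true = rng.normal(size=(D_IN, D_HID))
data = {}
for t in TASKS:
    X = rng.normal(size=(N, D_IN))
    data[t] = (X, X @ W_true @ rng.normal(size=D_HID))


class SGD:
    """Minimal SGD optimizer with its own learning rate (hypothetical)."""

    def __init__(self, lr):
        self.lr = lr

    def step(self, param, grad):
        param -= self.lr * grad  # in-place update of a NumPy array


W = rng.normal(scale=0.5, size=(D_IN, D_HID))                 # shared layer
heads = {t: rng.normal(scale=0.5, size=D_HID) for t in TASKS}  # per-task heads
shared_opt = SGD(lr=0.01)                                      # optimizer for shared layer
head_opts = {t: SGD(lr=0.01) for t in TASKS}                   # one optimizer per task head


def loss_and_grads(W, v, X, y):
    """MSE loss of y_hat = X @ W @ v and its gradients w.r.t. W and v."""
    h = X @ W
    r = h @ v - y
    g = 2.0 * r / len(y)                 # dL/d(prediction)
    return np.mean(r ** 2), X.T @ np.outer(g, v), h.T @ g


def full_loss(t):
    X, y = data[t]
    return float(loss_and_grads(W, heads[t], X, y)[0])


initial = {t: full_loss(t) for t in TASKS}
updates = {t: 0 for t in TASKS}
for _ in range(500):                     # each round gives every task one update
    for t in TASKS:                      # one task per mini-batch, round-robin
        X, y = data[t]
        idx = rng.choice(N, size=BATCH, replace=False)
        _, grad_W, grad_v = loss_and_grads(W, heads[t], X[idx], y[idx])
        shared_opt.step(W, grad_W)       # shared update
        head_opts[t].step(heads[t], grad_v)  # task-specific update
        updates[t] += 1

final = {t: full_loss(t) for t in TASKS}
print(updates)
print({t: (round(initial[t], 2), round(final[t], 2)) for t in TASKS})
```

Alternating tasks at the mini-batch level means no task can dominate the shared layer for long stretches, which is the intuition behind using equal, interleaved updates to limit forgetting.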

Neo’s first version has been extensively validated across major cardiovascular datasets spanning multiple modalities:

- Icentia 11K for beat-level ECG classification

- MIMIC-IV-ECG for rhythm-level analysis

- EchoNet-Dynamic for LVEF prediction

- CirCor DigiScope for murmur detection

- CAMUS for ultrasound segmentation

The model was trained and evaluated over 100 epochs and demonstrated superior performance compared with task-specific baselines, while maintaining lower computational complexity.
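A back-of-the-envelope parameter count shows where the savings of a shared architecture can come from. The budgets below (2M per encoder, 20M for the backbone, 0.1M per head) are purely hypothetical and are not Neo's real sizes. Parameter count is also only a proxy for computational complexity. The structure follows the architecture described earlier: one encoder per modality, one shared backbone, and one head per task, compared with one full model per task.

```python
# Hypothetical parameter budgets in millions; not Neo's real sizes.
ENCODER, BACKBONE, HEAD = 2.0, 20.0, 0.1

# Task -> input modality, loosely following the datasets listed above.
tasks = {
    "beat_classification": "ecg",
    "rhythm_analysis": "ecg",
    "lvef_prediction": "echo_video",
    "murmur_detection": "heart_sound",
    "ultrasound_segmentation": "echo_image",
}

# Baseline: one full model (encoder + backbone + head) per task.
separate = len(tasks) * (ENCODER + BACKBONE + HEAD)

# Shared: one encoder per modality, one shared backbone, one head per task.
n_modalities = len(set(tasks.values()))
shared = n_modalities * ENCODER + BACKBONE + len(tasks) * HEAD

print(f"separate: {separate:.1f}M, shared: {shared:.1f}M, "
      f"ratio: {separate / shared:.1f}x")
```

The backbone, usually the largest component, is paid for once rather than once per task, so the gap widens as tasks are added.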

Our architecture also underscores the advantages of shared embeddings in recognizing underlying patterns across biosignals, medical images, medical videos, and bio-audio. Its versatility is evident in the range of cardiovascular analysis tasks it handles within a single unified model. For example, Neo can identify heart chamber boundaries in ultrasound images and calculate how well the heart is pumping (ejection fraction), tasks that typically require separate specialized systems. This demonstrates the model’s ability to adapt to different types of cardiovascular assessment while maintaining accuracy.
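For reference, left ventricular ejection fraction is the share of end-diastolic blood volume pumped out with each beat: LVEF = (EDV - ESV) / EDV × 100, where EDV and ESV are the end-diastolic and end-systolic volumes. The function below simply encodes that standard formula. Whether a model regresses EF directly or derives it from segmented chamber volumes is a design choice the text above does not specify, and the volumes in the example are hypothetical.

```python
def ejection_fraction(edv_ml: float, esv_ml: float) -> float:
    """LVEF in percent from end-diastolic and end-systolic volumes (mL)."""
    if edv_ml <= 0 or esv_ml < 0 or esv_ml > edv_ml:
        raise ValueError("expected EDV > 0 and 0 <= ESV <= EDV")
    return (edv_ml - esv_ml) / edv_ml * 100.0


# Hypothetical volumes, e.g. estimated from segmented end-diastolic and
# end-systolic frames.
print(round(ejection_fraction(120.0, 50.0), 1))  # 58.3
```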

The Multi-Uni-Model (MuM) represents a significant advancement in medical diagnostics, particularly in cardiovascular disease prediction, where it has outperformed nearly all task-specific baseline models. The model’s flexible architecture allows it to be adapted to other body systems, positioning it as a promising solution for multitask applications across multiple medical domains. We are now also extending a variant to drug discovery, to help predict IC50 (the concentration of a compound needed to inhibit its target by 50%) from multiple ligand descriptors.

The evolution from single-modality ECG interpretation to sophisticated multimodal analysis represents a crucial advancement in cardiovascular diagnostics. While traditional ECG interpretation services have provided valuable insights, the limitations of single-signal analysis – from pattern recognition challenges to technical vulnerabilities – highlight the need for more comprehensive approaches. MuM’s ability to seamlessly integrate multiple data streams while maintaining computational efficiency offers a compelling path forward, particularly for established ECG services looking to enhance their diagnostic capabilities without completely overhauling existing infrastructure. By bridging the gap between traditional ECG analysis and modern multimodal diagnostics, this approach not only improves accuracy but also provides a practical pathway for diagnostic services to evolve alongside advancing technology.

Also, a special shoutout to our exceptional AI team members Karthick Sharma and Mokeeshan Vathanakumar for their help in creating this powerful model!

Neuralgap NEO helps accelerate Diagnostic Triage

Neuralgap NEO is a multimodal, transformer-based model that uses our proprietary multi-unimodal architecture to help enterprises incorporate new forms of medical data and diagnose conditions early, quickly, and with high precision.

Please send us a message if you are interested in partnering with us.