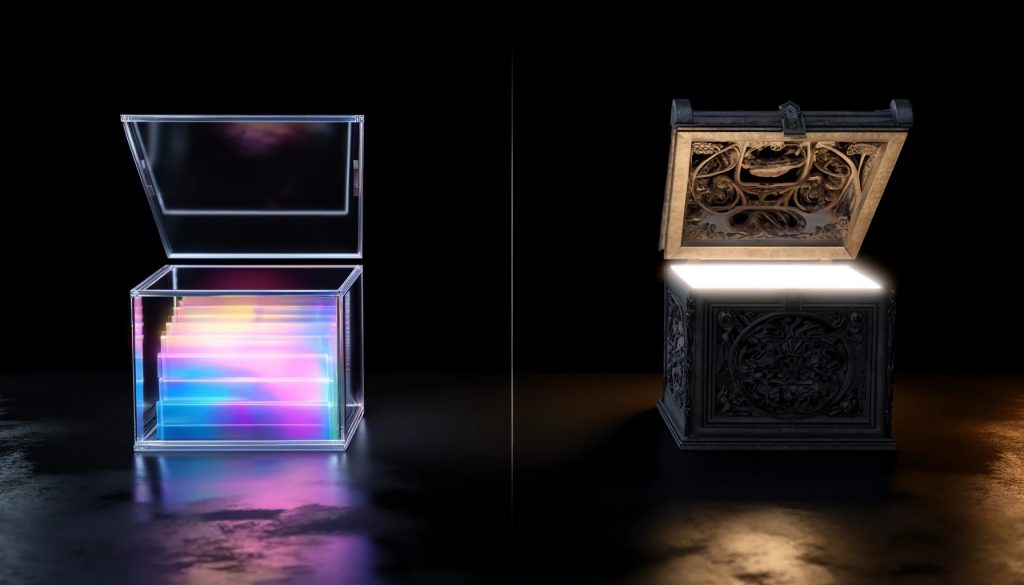

Battle of Open vs. Closed Source LLMs

Rapid advances in Large Language Models (LLMs) have split the AI landscape into two distinct camps: open-source and closed-source LLMs. This divide has sparked a heated debate about the future of AI development, with both approaches offering unique advantages and challenges. Open-source LLMs, exemplified by models like BLOOM and Mistral 7B, prioritize transparency, collaboration, and customization. Closed-source LLMs, such as GPT-4 and Anthropic’s Claude, focus on performance, safety, and commercial viability. As the battle between these two paradigms unfolds, it is crucial to examine their attributes and use cases to understand the implications for the AI ecosystem and its stakeholders.

A Quick Recap

Let’s recap some common concepts before we proceed.

Open Source

- Transparency – Open-source models provide access to the model’s codebase, architecture, and training data, enabling researchers and developers to examine and understand the inner workings of the model. This transparency builds trust and allows for external audits of the model’s safety and fairness.

- Collaboration and Innovation – Open-source development encourages collaboration among the AI community. Researchers and developers can build upon existing models, implement improvements, and adapt them for specific use cases.

- Customization and Flexibility – Organizations can fine-tune the models for domain-specific tasks, integrate them into existing pipelines, and modify the architecture to suit their unique requirements and niche applications.

Closed Source

- Performance and Scalability – The highest-end closed-source models tend to deliver superior performance in terms of speed, accuracy, and scalability. These models can handle large-scale deployments and provide consistent results across a wide range of applications.

- Safety and Security – Closed-source providers prioritize safety and security, with strict controls over the model’s access and usage. Commercial providers invest heavily in implementing robust safety measures, such as content filtering, bias mitigation, and secure deployment environments.

- Ease of Use and Support – Closed-source LLMs are often accompanied by well-documented APIs and user-friendly interfaces. This reduces the technical barriers to entry and allows organizations to quickly integrate LLMs into their products and services without extensive in-house expertise.

- Liability and Accountability – Commercial providers of closed-source LLMs assume responsibility for the model’s performance, security, and compliance with regulations. This accountability provides organizations with legal and operational assurances, mitigating risks associated with deploying AI systems in production environments.

Flexibility and Customization

Now let’s look at a few nuanced topics that tend to be discussed less often but deserve equal consideration.

Hallucination

- Open-source LLMs, particularly smaller models, are more prone to hallucination due to limited knowledge and training data, potentially generating irrelevant or inaccurate information. That being said, the open-source nature allows for iterative fine-tuning and expansion to mitigate this issue.

Fine-Tuning and Customization

- Open-source LLMs offer flexibility in customization, enabling organizations to tailor models to specific domains and use cases for improved accuracy and relevance.

- Fine-tuning open-source LLMs also allows the incorporation of domain-specific knowledge, enhancing performance in niche applications.

- In contrast, closed-source LLMs often have limited fine-tuning and customization options, with restricted depth and scope of modifications.

- This limitation can hinder the adaptation of closed-source LLMs to specific domains or use cases, leading to suboptimal performance or an increased risk of hallucination.

Foundation Model Performance

- The performance of foundation models, whether open-source or closed-source, significantly impacts their overall effectiveness.

- Closed-source LLMs, developed by well-resourced organizations, often have access to vast training data and computational resources, resulting in superior performance in accuracy, fluency, and coherence.

- Open-source foundation models may initially lag behind closed-source counterparts in performance due to resource and training data limitations.

- However, as open-source models gain traction and attract contributors, their performance can rapidly improve, narrowing the gap with closed-source alternatives.

Community and Ecosystem

- Open-source LLMs benefit from a vibrant community of developers, researchers, and users who contribute to the models’ improvement and expansion.

- The open-source ecosystem fosters collaboration, knowledge sharing, and rapid innovation, driving the advancement of LLM technology.

Transparency and Accountability

- Open-source LLMs provide transparency in their architecture, training data, and decision-making processes, enabling scrutiny and auditing by the community.

- This transparency promotes accountability and helps address concerns related to bias, fairness, and ethical considerations in LLM development and deployment.

Use Case Examples

Closed Source Model: Customer Support Chatbot for a Small E-commerce Business

- A small e-commerce business implements a closed-source LLM-powered chatbot to provide 24/7 customer support and improve customer satisfaction.

- Since the LLM is powerful and has built-in filters, it can be deployed largely out of the box.

- Moreover, the closed-source model’s performance and reliability are assured by the provider, enabling the small business to focus on its core operations without worrying about the chatbot’s maintenance or updates.

- Integration into the website and messaging platforms through a user-friendly API requires minimal technical knowledge, and the usage-based model scales easily to mid-tier volumes.

Open Source Model: Identifying Rent Escalation Clauses in Commercial Lease Agreements

- A law firm specializing in commercial real estate transactions implements an open-source LLM to automate the identification of rent escalation clauses in lease agreements.

- The LLM is fine-tuned on a corpus of commercial lease agreements, with a specific focus on the language and structure of rent escalation clauses.

- The law firm’s in-house AI team collaborates with open-source developers to create custom pre-processing scripts and fine-tuning techniques that improve the LLM’s accuracy in identifying and extracting rent escalation clauses.

- When a new lease agreement is received, the fine-tuned LLM is applied to the document, scanning for specific keywords, phrases, and patterns associated with rent escalation clauses.

- The LLM identifies and extracts the relevant clauses, along with key information such as the base rent, escalation percentage, and frequency of rent increases.

- The extracted clauses and key information are then presented to the law firm’s attorneys for further review and analysis, enabling them to quickly assess the potential financial impact of the rent escalation terms on their clients.

- However, the law firm acknowledges that the open-source LLM, while effective in identifying rent escalation clauses, may struggle with more complex aspects of the clauses, such as understanding the interplay between rent escalation and other lease provisions or assessing the fairness of the escalation terms in the context of current market conditions.
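The pre-processing step described above, scanning for keywords, phrases, and patterns before the fine-tuned LLM does the heavier work, can be sketched roughly as follows. This is a minimal illustration and not the firm's actual pipeline: the keyword list, the regular expressions, and the names `find_candidate_clauses` and `CandidateClause` are all assumptions made for the example. In practice, the candidate paragraphs would then be passed to the fine-tuned model and on to attorneys for review.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical trigger phrases; a real list would be curated by the attorneys.
ESCALATION_KEYWORDS = re.compile(
    r"\b(escalat\w*|rent\s+increase\w*|annual\s+increase\w*|CPI|consumer\s+price\s+index)\b",
    re.IGNORECASE,
)
# Matches figures such as "3%", "3.5 %" or "3 percent".
PERCENT = re.compile(r"(\d+(?:\.\d+)?)\s*(?:%|percent)", re.IGNORECASE)
# A deliberately small set of frequency expressions (assumption).
FREQUENCY = re.compile(
    r"\b(annual(?:ly)?|each\s+year|every\s+year|monthly|quarterly)\b",
    re.IGNORECASE,
)


@dataclass
class CandidateClause:
    text: str                            # the full paragraph that matched
    escalation_percent: Optional[float]  # first percentage found, if any
    frequency: Optional[str]             # first frequency term found, if any


def find_candidate_clauses(lease_text: str) -> List[CandidateClause]:
    """Return paragraphs that look like rent escalation clauses.

    This is only a coarse pre-filter: it takes the first percentage and
    frequency term in a matching paragraph, which may not be the right ones.
    """
    # Treat blank lines as paragraph boundaries.
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", lease_text) if p.strip()]
    candidates = []
    for paragraph in paragraphs:
        if not ESCALATION_KEYWORDS.search(paragraph):
            continue
        pct = PERCENT.search(paragraph)
        freq = FREQUENCY.search(paragraph)
        candidates.append(
            CandidateClause(
                text=paragraph,
                escalation_percent=float(pct.group(1)) if pct else None,
                frequency=freq.group(1).lower() if freq else None,
            )
        )
    return candidates
```

A pattern-based filter like this is cheap and easy to audit, but it cannot reason about how an escalation term interacts with other lease provisions, which is why it is only a first pass.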

Building a Competitive Moat

A hybrid approach that combines the two enables organizations to leverage the strengths of both open-source and closed-source models, delivering unique value to their customers and stakeholders, and positioning themselves as leaders in their respective industries.
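One way to picture a hybrid setup is a small router that sends domain-specific requests to a fine-tuned open-source model and everything else to a hosted closed-source model. The sketch below is illustrative only: `HybridRouter`, the stub backends, and the list of domain terms are hypothetical, and the stubs stand in for real model calls rather than representing any provider's API.

```python
import re
from dataclasses import dataclass
from typing import Callable, Sequence

# A backend is any callable that takes a prompt and returns text.
Backend = Callable[[str], str]


@dataclass
class Route:
    name: str
    backend: Backend


class HybridRouter:
    """Route prompts to a domain backend or a general backend."""

    def __init__(self, domain: Backend, general: Backend, domain_terms: Sequence[str]):
        self._domain = Route("domain", domain)
        self._general = Route("general", general)
        # Whole-word matching, so that "lease" does not match inside "please".
        pattern = "|".join(re.escape(term) for term in domain_terms)
        self._domain_pattern = re.compile(rf"\b(?:{pattern})\b", re.IGNORECASE)

    def pick(self, prompt: str) -> Route:
        """Choose the domain route when the prompt mentions a domain term."""
        if self._domain_pattern.search(prompt):
            return self._domain
        return self._general

    def answer(self, prompt: str) -> str:
        return self.pick(prompt).backend(prompt)


# Stub backends (assumptions): replace with real model calls in practice.
def fine_tuned_open_model(prompt: str) -> str:
    return f"[fine-tuned open-source model] {prompt}"


def hosted_closed_model(prompt: str) -> str:
    return f"[hosted closed-source model] {prompt}"


router = HybridRouter(
    domain=fine_tuned_open_model,
    general=hosted_closed_model,
    domain_terms=["lease", "rent escalation", "tenant"],
)
```

Keyword routing is the simplest possible policy. A production system might instead route on document type, data sensitivity, or a classifier's output.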
